Java BlockingQueue vs Semaphore: Complete Producer-Consumer Tutorial

Introduction

The Producer-Consumer problem is everywhere in real programming. We see it in web servers handling requests, message queues processing data, and even in simple file downloaders. While we can use basic synchronized blocks, they often aren’t enough for serious applications.

Think about it – a busy e-commerce site might process thousands of orders per second. A financial trading system needs to handle market data feeds without losing a single tick. These scenarios need something more robust than basic synchronisation.

Today, we’ll explore two powerful solutions: BlockingQueue and Semaphore. We’ll build working examples, look at how they behave at runtime, and see when to use each one.

Why might synchronized not be enough?

- Performance Bottlenecks: In high-traffic systems handling 10,000+ tasks per second, a heavily contended synchronized block can become a bottleneck, because only one thread at a time makes progress inside it. We’ve seen trading platforms miss market opportunities because threads were waiting in line.

// This becomes slow with many threads

synchronized(lock) {

// Only one thread can do work here

processData();

}

- Thread Starvation: Some threads might wait indefinitely while others keep getting access, because intrinsic locks make no fairness guarantee. Imagine a priority task queue where high-value transactions need to jump ahead: synchronized blocks can’t handle this elegantly.

- Limited Flexibility: Modern architectures use message queues like Kafka and RabbitMQ. These patterns extend across networks, something basic synchronization can’t handle.

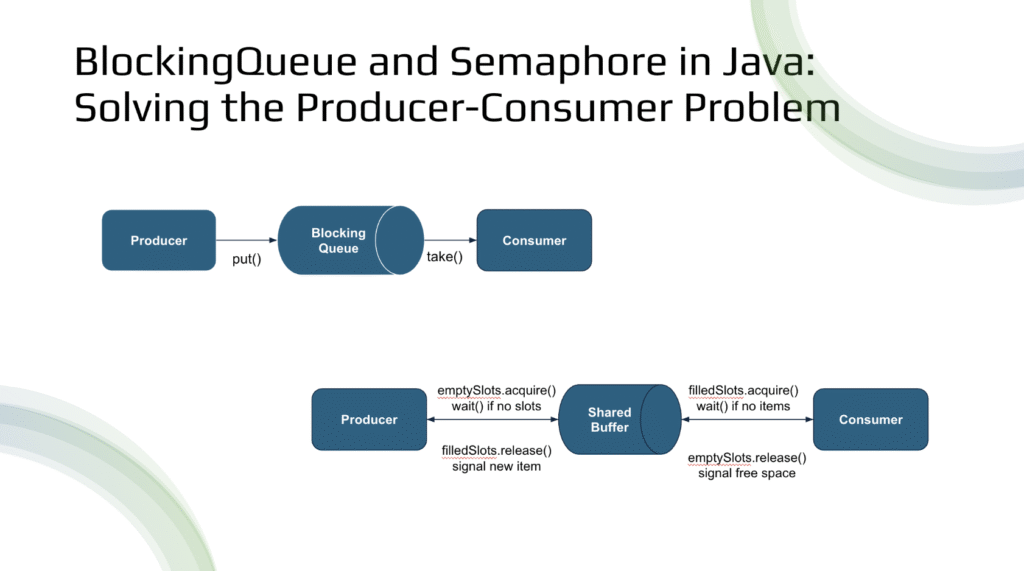

Approach 1: BlockingQueue – The Simple Solution

In Java, the BlockingQueue interface from the java.util.concurrent package provides a high-level, thread-safe abstraction for coordinating data exchange between multiple threads. It is especially useful in producer-consumer scenarios where we need to safely share a bounded buffer between producer and consumer threads.

Here’s what makes it special:

- Automatic blocking: Producers wait when the queue is full

- Consumer blocking: Consumers wait when the queue is empty

- Thread-safe: No race conditions or data corruption

- No busy waiting: Threads sleep instead of spinning

Let’s build an example using ArrayBlockingQueue, a fixed-size thread-safe queue implementation:

import java.util.concurrent.ArrayBlockingQueue;

import java.util.concurrent.BlockingQueue;

/**

* BlockingQueue Producer-Consumer Example

*

* This shows how BlockingQueue handles thread coordination automatically.

* No manual synchronization needed!

*/

public class BlockingQueueExample {

private static final int QUEUE_CAPACITY = 5;

private static volatile boolean running = true;

private static long itemsProduced = 0;

private static long itemsConsumed = 0;

public static void main(String[] args) throws InterruptedException {

BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(QUEUE_CAPACITY);

long startTime = System.currentTimeMillis();

// Producer Thread: Creates an item every 500ms

Thread producer = new Thread(() -> {

int value = 0;

try {

while (running && !Thread.currentThread().isInterrupted()) {

queue.put(value);

itemsProduced++;

System.out.println("[Producer] Created item: " + value + " (Queue size: " + queue.size() + ")");

value++;

// Simulate long running work

Thread.sleep(500);

}

} catch (InterruptedException e) {

System.out.println("Producer interrupted - stopping gracefully");

Thread.currentThread().interrupt();

} finally {

System.out.println("Producer finished. Total items produced: " + itemsProduced);

}

}, "Producer-Thread");

// Consumer Thread: Processes items every 1000ms

Thread consumer = new Thread(() -> {

try {

while (running && !Thread.currentThread().isInterrupted()) {

int data = queue.take();

itemsConsumed++;

System.out.println("[Consumer] Processed item: " + data + " (Queue size: " + queue.size() + ")");

Thread.sleep(1000);

}

} catch (InterruptedException e) {

System.out.println("Consumer interrupted - stopping gracefully");

Thread.currentThread().interrupt();

} finally {

System.out.println("Consumer finished. Total items consumed: " + itemsConsumed);

}

}, "Consumer-Thread");

producer.start();

consumer.start();

Thread.sleep(10000);

running = false;

producer.interrupt();

consumer.interrupt();

producer.join();

consumer.join();

long endTime = System.currentTimeMillis();

System.out.println("\n=== Performance Summary ===");

System.out.println("Total runtime: " + (endTime - startTime) + "ms");

System.out.println("Items produced: " + itemsProduced);

System.out.println("Items consumed: " + itemsConsumed);

System.out.println("Queue final size: " + queue.size());

}

}

The following behavior can be observed when running the code:

[Producer] Created item: 0 (Queue size: 1)

[Producer] Created item: 1 (Queue size: 2)

[Consumer] Processed item: 0 (Queue size: 1)

[Producer] Created item: 2 (Queue size: 2)

[Producer] Created item: 3 (Queue size: 3)

[Consumer] Processed item: 1 (Queue size: 2)

[Producer] Created item: 4 (Queue size: 3)

[Producer] Created item: 5 (Queue size: 4)

[Producer] Created item: 6 (Queue size: 5)

[Producer] Created item: 7 (Queue size: 5) // Queue is full - producer waits!

[Consumer] Processed item: 2 (Queue size: 4) // Consumer frees up space

[Producer] Created item: 8 (Queue size: 5) // Producer continues

How BlockingQueue Works

BlockingQueue itself is an interface. ArrayBlockingQueue, the implementation used above, coordinates threads with three key components:

- ReentrantLock: Provides mutual exclusion (only one thread can modify the queue at a time)

- Condition Variables:

  - notFull: Producers wait here when the queue is full

  - notEmpty: Consumers wait here when the queue is empty

- Array: The actual storage for items (LinkedBlockingQueue uses linked nodes and separate put and take locks instead)

When the queue is full

If the producer tries to add an item when the queue is full:

→ The producer thread automatically pauses (blocks)

→ It wakes up only when space becomes available

→ No lost items, no crashes

When the queue is empty

If the consumer tries to take an item when empty:

→ The consumer thread automatically pauses

→ It wakes up when new items arrive

→ No busy waiting that wastes CPU

- No Race Conditions: The lock ensures only one thread modifies the queue

- Efficient Waiting: Threads sleep instead of spinning (no CPU waste)

- Automatic Coordination: Producers and consumers wake each other up

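- Fair Access: Optional. By default, ArrayBlockingQueue does not guarantee the order in which waiting threads proceed. Passing true as the fair argument of its constructor makes blocked threads proceed in FIFO order, which avoids starvation at some cost in throughput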
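The ordering of waiting threads in ArrayBlockingQueue is controlled by a constructor flag. The sketch below shows both constructors side by side; the class and method names (FairQueueDemo, defaultQueue, fairQueue) are ours, chosen only for illustration.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class FairQueueDemo {
    // One-argument constructor: no ordering guarantee among blocked threads.
    static BlockingQueue<Integer> defaultQueue(int capacity) {
        return new ArrayBlockingQueue<>(capacity);
    }

    // fair = true: blocked producers and consumers proceed in FIFO order.
    static BlockingQueue<Integer> fairQueue(int capacity) {
        return new ArrayBlockingQueue<>(capacity, true);
    }

    public static void main(String[] args) {
        BlockingQueue<Integer> queue = fairQueue(5);
        queue.offer(1);
        System.out.println("Remaining capacity: " + queue.remainingCapacity());
    }
}
```

Fairness usually lowers throughput, so it is worth enabling only when starvation is an actual concern.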

How BlockingQueue Solves the Producer-Consumer Problem

Different Speed Problem: Our producer makes an item every 500ms, but the consumer takes 1000ms to process each one. Without a bounded, blocking buffer, we’d either have to drop items or let the backlog grow until memory runs out. BlockingQueue handles this automatically:

- When the queue fills up, the producer waits

- When the queue empties, the consumer waits

- No data loss, no memory issues

Thread Safety: Multiple threads can safely call put() and take() on the same queue concurrently. The implementation uses internal locks and conditions to prevent race conditions.

Efficiency: No busy waiting. Threads sleep when they can’t proceed, saving CPU cycles.

Approach 2: Semaphore – The Flexible Solution

In Java, the Semaphore class from the java.util.concurrent package provides a flexible way to control access to shared resources using “permits”. While BlockingQueue abstracts away the low-level synchronisation, using Semaphores gives us full control over the coordination logic.

We’ll use three components:

- emptySlots: Tracks available space (starts at buffer size)

- filledSlots: Tracks available items (starts at 0)

- bufferLock: Ensures only one thread modifies the buffer at a time

import java.util.LinkedList;

import java.util.Queue;

import java.util.concurrent.Semaphore;

/**

* Semaphore Producer-Consumer Example

*

* This shows manual thread coordination using semaphores.

* More control, but more complexity!

*/

public class SemaphoreExample {

private static final int BUFFER_SIZE = 5;

private static volatile boolean running = true;

private static final Queue<Integer> buffer = new LinkedList<>();

private static final Object bufferLock = new Object();

private static final Semaphore emptySlots = new Semaphore(BUFFER_SIZE);

private static final Semaphore filledSlots = new Semaphore(0);

private static long itemsProduced = 0;

private static long itemsConsumed = 0;

public static void main(String[] args) throws InterruptedException {

long startTime = System.currentTimeMillis();

Thread producer = new Thread(() -> {

int value = 0;

try {

while (running && !Thread.currentThread().isInterrupted()) {

// Wait for empty slot

emptySlots.acquire();

// Add item to buffer (synchronized for thread safety)

synchronized (bufferLock) {

buffer.add(value);

itemsProduced++;

System.out.println("[Producer] Created item: " + value + " (Buffer size: " + buffer.size() + ", Empty slots: " + emptySlots.availablePermits() + ")");

value++;

}

// Signal that new item is available

filledSlots.release();

Thread.sleep(500);

}

} catch (InterruptedException e) {

System.out.println("Producer interrupted - stopping gracefully");

Thread.currentThread().interrupt();

} finally {

System.out.println("Producer finished. Total items produced: " + itemsProduced);

}

}, "Producer-Thread");

Thread consumer = new Thread(() -> {

try {

while (running && !Thread.currentThread().isInterrupted()) {

// Wait for available item

filledSlots.acquire();

// Remove item from buffer

int data;

synchronized (bufferLock) {

data = buffer.remove();

itemsConsumed++;

System.out.println("[Consumer] Processing item: " + data + " (Buffer size: " + buffer.size() + ", Filled slots: " + filledSlots.availablePermits() + ")");

}

// Signal that slot is now empty

emptySlots.release();

Thread.sleep(1000);

System.out.println("[Consumer] Finished processing item: " + data);

}

} catch (InterruptedException e) {

System.out.println("Consumer interrupted - stopping gracefully");

Thread.currentThread().interrupt();

} finally {

System.out.println("Consumer finished. Total items consumed: " + itemsConsumed);

}

}, "Consumer-Thread");

producer.start();

consumer.start();

Thread.sleep(10000);

running = false;

producer.interrupt();

consumer.interrupt();

producer.join();

consumer.join();

long endTime = System.currentTimeMillis();

System.out.println("\n=== Performance Summary ===");

System.out.println("Total runtime: " + (endTime - startTime) + "ms");

System.out.println("Items produced: " + itemsProduced);

System.out.println("Items consumed: " + itemsConsumed);

System.out.println("Buffer final size: " + buffer.size());

}

}

When the code is executed, we observe the following:

[Producer] Created item: 0 (Buffer size: 1, Empty slots: 4)

[Producer] Created item: 1 (Buffer size: 2, Empty slots: 3)

[Consumer] Processing item: 0 (Buffer size: 1, Filled slots: 0)

[Producer] Created item: 2 (Buffer size: 2, Empty slots: 3)

[Consumer] Finished processing item: 0

[Producer] Created item: 3 (Buffer size: 3, Empty slots: 2)

[Consumer] Processing item: 1 (Buffer size: 2, Filled slots: 1)

[Producer] Created item: 4 (Buffer size: 3, Empty slots: 2)

[Producer] Created item: 5 (Buffer size: 4, Empty slots: 1)

[Consumer] Finished processing item: 1

[Producer] Created item: 6 (Buffer size: 5, Empty slots: 0)

[Producer] Created item: 7 (Buffer size: 5, Empty slots: 0)

[Consumer] Processing item: 2 (Buffer size: 4, Filled slots: 2)

[Producer] Created item: 8 (Buffer size: 5, Empty slots: 0)

[Consumer] Finished processing item: 2

=== Performance Summary ===

Total runtime: 10087ms

Items produced: 18

Items consumed: 9

Buffer final size: 4

How Semaphore Coordination Works

- Producer wants to add item:

  - Calls emptySlots.acquire(): waits if the buffer is full

  - Locks the buffer and adds the item

  - Calls filledSlots.release(): signals the consumer

- Consumer wants to take item:

  - Calls filledSlots.acquire(): waits if the buffer is empty

  - Locks the buffer and removes the item

  - Calls emptySlots.release(): signals the producer

This creates a perfect dance – producers and consumers coordinate automatically!

Key Observations:

- Permit Tracking: We can see exactly how many empty slots remain

- Buffer Management: Buffer size changes as items are added/removed

- Automatic Blocking: When empty slots reach 0, producer waits

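- Throughput: The producer is twice as fast as the consumer, so the buffer fills up and the producer is then throttled to the consumer’s pace. Items produced can exceed items consumed only by what is still in the buffer (at most 5), so exact totals vary from run to run and the figures above are only illustrative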

BlockingQueue vs Semaphore: When to Use What

| Feature | BlockingQueue | Semaphore Approach |
|---|---|---|
| Ease of Use | Simple API: just put() and take() | More complex, needs manual control |
| Synchronization | Automatic, thread-safe | Manual (using synchronized + semaphores) |
| Buffer Management | Built-in (no manual lock or queue needed) | Custom queue + semaphores + locking needed |
| Flexibility | Limited to queue semantics | Very flexible; can model other patterns |
| Performance | Highly optimized internally | Can be slightly slower due to manual coordination |
| Learning Curve | Low (ideal for beginners) | Moderate (needs understanding of concurrency concepts) |
| Memory | Efficient, built-in buffer management | Need to manage buffer ourselves |

Real-World Examples

Web Server Request Processing

When building a web server, we need to handle incoming HTTP requests efficiently. We can’t always process requests as fast as they arrive, because database queries or API calls might take time. Here’s where BlockingQueue works well:

BlockingQueue<HttpRequest> requestQueue = new ArrayBlockingQueue<>(1000);

// Producer: HTTP listener

while (serverRunning) {

HttpRequest request = acceptConnection();

requestQueue.put(request); // Blocks if queue is full (backpressure)

}

// Consumer: Worker threads

while (workerRunning) {

HttpRequest request = requestQueue.take(); // Blocks if empty

processRequest(request);

}

When traffic spikes, the queue fills up and naturally creates backpressure. The server stops accepting new connections temporarily, preventing memory overflow and maintaining response quality.

Database Connection Pool

Database connections are expensive resources. We can’t create unlimited connections, so we use Semaphore to control access:

// Using Semaphore for connection limits

Semaphore connectionLimit = new Semaphore(10); // Max 10 connections

// acquire() can be interrupted while waiting, so the exception must be declared

public Connection getConnection() throws InterruptedException {

connectionLimit.acquire(); // Wait for a free permit

return createConnection();

}

public void releaseConnection(Connection conn) {

try {

closeConnection(conn);

} finally {

connectionLimit.release(); // Free up the permit even if closing fails

}

}

The semaphore ensures we never exceed database limits. When all 10 connections are in use, new requests wait automatically. This prevents database overload and connection errors.
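A common refinement of this pattern is to bound the wait and to return the permit in a finally block, so that a failure in the guarded work cannot leak permits. The sketch below is one way to do that with Semaphore.tryAcquire; BoundedResourceGate and callWithPermit are hypothetical names, and the Supplier stands in for whatever work needs a connection.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

class BoundedResourceGate {
    private final Semaphore permits;

    BoundedResourceGate(int maxConcurrent) {
        this.permits = new Semaphore(maxConcurrent);
    }

    // Runs `work` while holding a permit. Returns null if no permit
    // became free within the timeout or the wait was interrupted.
    <T> T callWithPermit(Supplier<T> work, long timeoutMillis) {
        boolean acquired;
        try {
            acquired = permits.tryAcquire(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status
            return null;
        }
        if (!acquired) {
            return null;
        }
        try {
            return work.get();
        } finally {
            permits.release(); // always give the permit back, even if work throws
        }
    }

    int availablePermits() {
        return permits.availablePermits();
    }

    public static void main(String[] args) {
        BoundedResourceGate gate = new BoundedResourceGate(10);
        String result = gate.callWithPermit(() -> "query result", 100);
        System.out.println(result + ", permits free: " + gate.availablePermits());
    }
}
```

Returning null on timeout keeps the sketch short; a real pool would more likely throw a dedicated exception so callers cannot confuse a timeout with a null result.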

Common BlockingQueue and Semaphore Interview Questions

Here are some frequently asked interview questions and concepts related to both:

1. How does BlockingQueue handle thread synchronisation internally?

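BlockingQueue is an interface, so the details depend on the implementation. ArrayBlockingQueue uses a single ReentrantLock for mutual exclusion and two Condition variables:

- notFull: Producers wait here when the queue is full

- notEmpty: Consumers wait here when the queue is empty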

When a producer adds an item, it signals notEmpty. When a consumer removes an item, it signals notFull.

2. What happens if multiple producers and consumers use the same BlockingQueue?

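The queue remains thread-safe thanks to its internal locks and conditions: each item is delivered to exactly one consumer. However, the order in which threads run depends on JVM and OS thread scheduling, so which consumer receives which item is not predictable.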
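A small check can make this concrete. The sketch below runs several producers and consumers against one bounded queue and verifies that every item arrives exactly once. The class name, the STOP sentinel used for shutdown, and the queue capacity of 8 are illustrative assumptions, not part of the examples above.

```java
import java.util.Set;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;

class MultiProducerConsumerCheck {
    private static final int STOP = -1; // hypothetical sentinel telling a consumer to exit

    // Returns true if every produced item was delivered exactly once.
    static boolean run(int producers, int consumers, int itemsPerProducer) {
        BlockingQueue<Integer> queue = new LinkedBlockingQueue<>(8);
        Set<Integer> seen = ConcurrentHashMap.newKeySet();
        AtomicInteger deliveries = new AtomicInteger();
        Thread[] producerThreads = new Thread[producers];
        Thread[] consumerThreads = new Thread[consumers];

        for (int p = 0; p < producers; p++) {
            final int base = p * itemsPerProducer; // disjoint id range per producer
            producerThreads[p] = new Thread(() -> {
                try {
                    for (int i = 0; i < itemsPerProducer; i++) {
                        queue.put(base + i);
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            producerThreads[p].start();
        }
        for (int c = 0; c < consumers; c++) {
            consumerThreads[c] = new Thread(() -> {
                try {
                    for (int v = queue.take(); v != STOP; v = queue.take()) {
                        seen.add(v);
                        deliveries.incrementAndGet();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            consumerThreads[c].start();
        }
        try {
            for (Thread t : producerThreads) t.join();
            for (int c = 0; c < consumers; c++) queue.put(STOP); // one sentinel per consumer
            for (Thread t : consumerThreads) t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        int total = producers * itemsPerProducer;
        return seen.size() == total && deliveries.get() == total;
    }

    public static void main(String[] args) {
        System.out.println("Exactly-once delivery: " + run(3, 2, 1000));
    }
}
```

The check says nothing about which consumer handled which item; that split changes from run to run.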

3. Can BlockingQueue cause a deadlock? If yes, how?

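Not by itself: the queue’s internal lock is only held briefly, and blocked threads wait on conditions that release it. However, deadlocks can occur if you hold other locks while calling blocking methods, because a thread blocked in put() keeps those locks, and a consumer that needs one of them can never reach take() to free space: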

// DANGER: Potential deadlock

synchronized(lockA) {

synchronized(lockB) {

queue.put(item); // If this blocks, we're holding two locks!

}

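}

4. What’s the difference between put()/take() and offer()/poll()?

- put() blocks until space is available

- take() blocks until an item is available

- offer() returns false immediately if the queue is full

- poll() returns null immediately if the queue is empty

Both offer() and poll() also have timed overloads that wait up to a given timeout before giving up.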
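The non-blocking and timed variants can be seen on a queue of capacity 1. This is a minimal sketch; the class name OfferPollDemo and the 100 ms timeout are arbitrary choices for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

class OfferPollDemo {
    // Collects the return value of each call, in order.
    static List<Object> run() throws InterruptedException {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        List<Object> results = new ArrayList<>();

        results.add(queue.offer("a"));                              // true: there was room
        results.add(queue.offer("b"));                              // false: full, returns at once
        results.add(queue.offer("c", 100, TimeUnit.MILLISECONDS));  // false after waiting 100 ms

        results.add(queue.poll());                                  // "a"
        results.add(queue.poll());                                  // null: empty, returns at once
        results.add(queue.poll(100, TimeUnit.MILLISECONDS));        // null after waiting 100 ms
        return results;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run()); // prints [true, false, false, a, null, null]
    }
}
```

Calling put("b") at the second step would instead block forever here, since nothing ever takes from the queue.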

5. How would you implement a BlockingQueue from scratch?

Key components:

- A Queue (like LinkedList) for storage

- A ReentrantLock for thread safety

- Two Condition variables (notFull, notEmpty)

- put() waits on notFull, signals notEmpty

- take() waits on notEmpty, signals notFull
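The components listed above can be put together as follows. This is a teaching sketch rather than the JDK's implementation: SimpleBlockingQueue is a hypothetical name, and ArrayDeque is used for storage where a LinkedList would work equally well.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

class SimpleBlockingQueue<T> {
    private final Queue<T> items = new ArrayDeque<>();
    private final int capacity;
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notFull = lock.newCondition();
    private final Condition notEmpty = lock.newCondition();

    SimpleBlockingQueue(int capacity) {
        if (capacity <= 0) throw new IllegalArgumentException("capacity must be positive");
        this.capacity = capacity;
    }

    void put(T item) throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (items.size() == capacity) {
                notFull.await();   // releases the lock while waiting; loop guards against spurious wakeups
            }
            items.add(item);
            notEmpty.signal();     // wake one waiting consumer
        } finally {
            lock.unlock();
        }
    }

    T take() throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (items.isEmpty()) {
                notEmpty.await();
            }
            T item = items.remove();
            notFull.signal();      // wake one waiting producer
            return item;
        } finally {
            lock.unlock();
        }
    }

    int size() {
        lock.lock();
        try {
            return items.size();
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        SimpleBlockingQueue<Integer> queue = new SimpleBlockingQueue<>(2);
        queue.put(1);
        queue.put(2);
        System.out.println(queue.take() + ", " + queue.take()); // prints 1, 2
    }
}
```

The waits sit in while loops rather than if statements because a thread can wake up without the condition being true, and another thread may have changed the queue before the woken thread reacquires the lock.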

6. Can a Semaphore cause a deadlock? How?

Yes, if threads acquire semaphores in different orders:

// Thread 1: acquire A, then B

semaphoreA.acquire();

semaphoreB.acquire();

// Thread 2: acquire B, then A

semaphoreB.acquire();

semaphoreA.acquire(); // DEADLOCK!

If each semaphore has a single permit and both threads hold one while waiting for the other, a deadlock occurs. Always acquire semaphores in the same order across all threads.
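The fix can be sketched as follows: both threads take A before B, so neither can hold B while waiting for A. OrderedAcquireDemo and its run method are hypothetical names used only for this illustration.

```java
import java.util.concurrent.Semaphore;

class OrderedAcquireDemo {
    // Two threads repeatedly acquire both semaphores in the same order
    // and increment a shared counter while holding them.
    static int run(int iterations) {
        Semaphore a = new Semaphore(1);
        Semaphore b = new Semaphore(1);
        int[] counter = new int[1];

        Runnable task = () -> {
            for (int i = 0; i < iterations; i++) {
                a.acquireUninterruptibly(); // always A first...
                b.acquireUninterruptibly(); // ...then B
                try {
                    counter[0]++;           // only one thread can hold both permits
                } finally {
                    b.release();            // release in reverse order of acquisition
                    a.release();
                }
            }
        };

        Thread t1 = new Thread(task, "worker-1");
        Thread t2 = new Thread(task, "worker-2");
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return counter[0];
    }

    public static void main(String[] args) {
        System.out.println("Counter: " + run(10000)); // prints Counter: 20000
    }
}
```

When a global order is hard to enforce, tryAcquire with a timeout is another option: a thread that cannot get the second permit releases the first and retries.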

7. How would you implement a producer-consumer problem using Semaphore?

- Use two counting semaphores, emptySlots (initialised to buffer size) and filledSlots (initialised to 0), plus a lock that protects the buffer itself.

- Producer: acquire emptySlots, add item to buffer, release filledSlots.

- Consumer: acquire filledSlots, remove item from buffer, release emptySlots.
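Wrapped into a reusable class, the same steps might look like the sketch below. It mirrors the emptySlots, filledSlots and bufferLock names from the earlier example, but the SemaphoreBuffer class itself is hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Objects;
import java.util.Queue;
import java.util.concurrent.Semaphore;

class SemaphoreBuffer<T> {
    private final Queue<T> buffer = new ArrayDeque<>();
    private final Object bufferLock = new Object();
    private final Semaphore emptySlots;                      // permits = free space
    private final Semaphore filledSlots = new Semaphore(0);  // permits = items available

    SemaphoreBuffer(int capacity) {
        this.emptySlots = new Semaphore(capacity);
    }

    void put(T item) throws InterruptedException {
        Objects.requireNonNull(item);     // reject null before taking a permit
        emptySlots.acquire();             // wait for free space
        synchronized (bufferLock) {
            buffer.add(item);
        }
        filledSlots.release();            // signal that an item is available
    }

    T take() throws InterruptedException {
        filledSlots.acquire();            // wait for an item
        T item;
        synchronized (bufferLock) {
            item = buffer.remove();
        }
        emptySlots.release();             // signal that a slot is free
        return item;
    }

    public static void main(String[] args) throws InterruptedException {
        SemaphoreBuffer<String> buffer = new SemaphoreBuffer<>(2);
        buffer.put("first");
        buffer.put("second");
        System.out.println(buffer.take() + ", " + buffer.take()); // prints first, second
    }
}
```

The semaphores only count slots and items. The synchronized block is still needed because ArrayDeque is not thread-safe and several producers or consumers may hold permits at the same time.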

8. Can you use a single Semaphore instead of two for producer-consumer?

A single semaphore can’t track both empty and filled slots. We need separate counting for two different conditions – just like BlockingQueue uses two Condition variables.

Conclusion

We’ve covered two powerful approaches to the Producer-Consumer problem:

- BlockingQueue: Simple, efficient, perfect for most use cases

- Semaphore: Flexible, controllable, great for custom patterns

For most applications, start with BlockingQueue. It’s battle-tested, optimized, and handles the complexity for you. Move to Semaphore only when you need the extra control.